
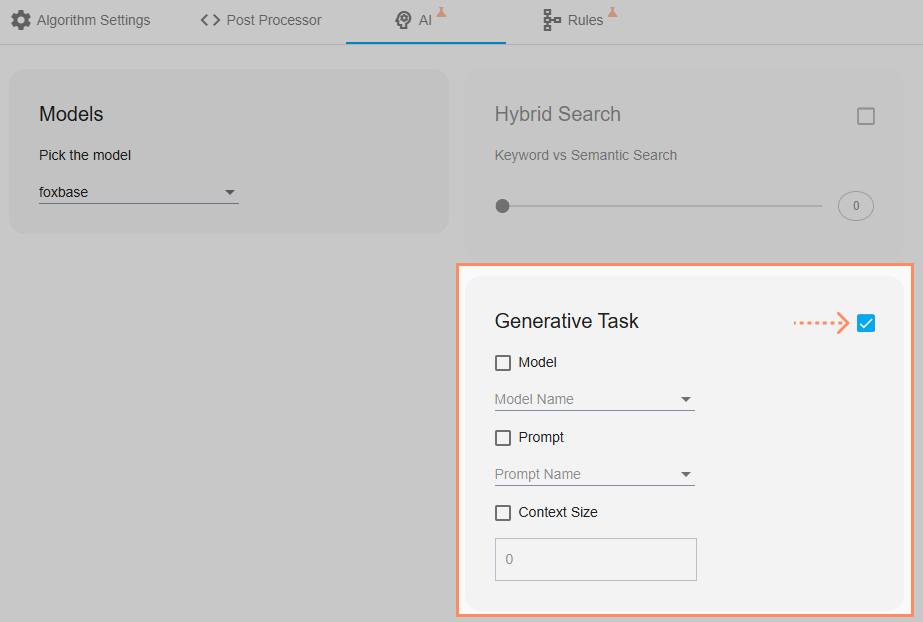

Configure generative task


The Generative Task section contains all AI elements that generate an answer directly after a search query: the AI responds to the query with an individual recommendation. The configuration consists of the following elements:

Generative model (called model in the interface)

Prompt

Context Size

A generative model is a pre-trained AI model that independently generates answers based on input data, using patterns learned from large amounts of data. Together with the search model, it is one of the core elements of the AI feature.

A prompt is an input or instruction that helps the AI to generate a targeted response by defining the tone, type or content of the response.

The Context Size setting limits the number of products that the AI uses to generate the text.

💡Make sure that the AI feature is activated. Refer to the related article.

Select generative model

Open the Algorithm > AI section.

Activate Generative Task by clicking on the checkbox.

Select the desired model under Model Name.


The following models are available:

gpt4o-mini: Supports larger data records with up to 128,000 characters. Extremely fast, but with a slight loss of quality compared to gpt4o. Processes the data with large language models from OpenAI in the USA; the data does not remain with FoxBase.

gpt4o: Supports larger data records with up to 128,000 characters. More intelligent than gpt4o-mini, but somewhat slower. Processes the data with large language models from OpenAI in the USA; the data does not remain with FoxBase.

haiku: Supports large data records with up to 200,000 characters. Speed and quality comparable to gpt4o-mini. Your data remains with FoxBase and does not reach third-party providers.

sonnet: Supports large data records with up to 200,000 characters. Speed and quality comparable to gpt4o. Your data remains with FoxBase and does not reach third-party providers.

gemini: Supports very large data records with more than 1 million characters. This is the fastest model we offer, with very little loss of quality. However, your data is passed on to Google, which uses it to train its own models. We therefore recommend using this model only with dummy data or data that is already publicly available.

nova-micro: Supports data records with up to 128,000 characters. The fastest model we offer with data privacy; however, it supports text only and cannot be used with images. Your data remains with FoxBase and does not reach third-party providers.

nova-lite: Supports large data records with up to 300,000 characters. Can deliver more accurate results than haiku with relatively fast responses. Your data remains with FoxBase and does not reach third-party providers.

nova-pro: Supports large data records with up to 300,000 characters. Speed and quality comparable to sonnet. Your data remains with FoxBase and does not reach third-party providers.

💡Rule of thumb: We recommend sonnet by default for privacy-compliant, good-quality responses.
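To compare the options above at a glance, the following Python sketch filters the listed models by data size, data privacy and image support. It is purely illustrative: the `Model` class and the `candidate_models` function are hypothetical and not part of FoxBase. The limits are transcribed from the list above, and gemini's "more than 1 million" is entered as 1,000,000.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    max_chars: int  # data record limit as stated in the model list
    data_stays_with_foxbase: bool
    text_only: bool = False  # only nova-micro is described as text-only


# Hypothetical lookup table transcribed from the model list above.
# gemini is listed as "more than 1 million", entered here as 1_000_000.
MODELS = [
    Model("gpt4o-mini", 128_000, data_stays_with_foxbase=False),
    Model("gpt4o", 128_000, data_stays_with_foxbase=False),
    Model("haiku", 200_000, data_stays_with_foxbase=True),
    Model("sonnet", 200_000, data_stays_with_foxbase=True),
    Model("gemini", 1_000_000, data_stays_with_foxbase=False),
    Model("nova-micro", 128_000, data_stays_with_foxbase=True, text_only=True),
    Model("nova-lite", 300_000, data_stays_with_foxbase=True),
    Model("nova-pro", 300_000, data_stays_with_foxbase=True),
]


def candidate_models(data_chars: int, require_privacy: bool, needs_images: bool) -> list[str]:
    """Return the names of listed models that satisfy all three constraints."""
    return [
        m.name
        for m in MODELS
        if m.max_chars >= data_chars  # data fits within the stated limit
        and (m.data_stays_with_foxbase or not require_privacy)  # privacy requirement
        and not (needs_images and m.text_only)  # skip text-only models if images are needed
    ]
```

For example, `candidate_models(150_000, require_privacy=True, needs_images=False)` returns `['haiku', 'sonnet', 'nova-lite', 'nova-pro']`; among these, sonnet is the recommended default.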

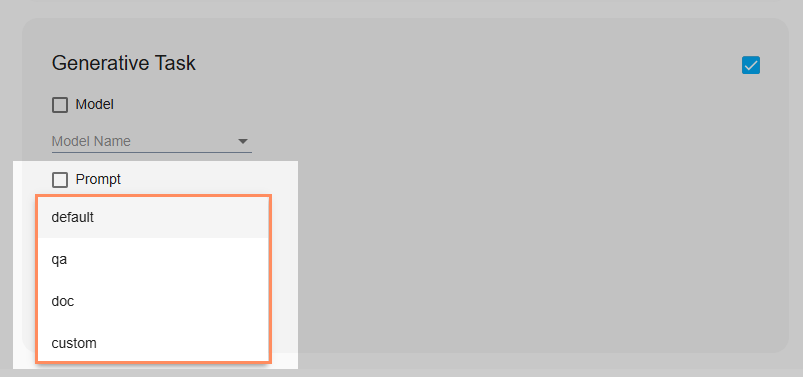

Setting the prompt

Click on Prompt Name and select the desired option.

The following options are available:

default: Standard setting. The AI responds like a sales representative.

qa: Setting for a question-and-answer scenario. Selector users ask questions and the AI answers them. Useful if users want their questions about the product answered via the search rather than receiving a specific product recommendation.

doc: The AI searches the contents of PDF documents. The PDF documents are converted into products via a pipeline and imported into the Workbench. If you are interested in this function, please contact CSM Support.

custom: User-defined setting. Add instructions on how the AI should handle search queries. If you need more complex custom prompts but are not familiar with prompt engineering, contact CSM Support and we will help you.

💡 Tips for writing prompts

Be precise: Formulate clearly and tell the AI exactly what information you need. The more precise you are, the more accurate the result will be.

Use a clear structure: If you have several questions, divide your prompt into short, comprehensible paragraphs or bullet points.

Describe the desired format: Mention what the answer should look like, e.g. "in a list" or "as a short summary".

Avoid technical jargon: Use simple, general terms; this makes it easier for the AI to understand what you want.

Test and refine: Start with an initial prompt and adjust it if necessary until the result meets your expectations.

💡Rule of thumb: We recommend starting with the default option. The AI responds like a helpful member of your team. The tone is friendly, similar to ChatGPT.

Stored default prompt

You are a professional salesman called Foxy the AI assistant with extensive knowledge about various products. You are tasked with recommending the most suitable products to a customer based on their specific query. Below are the product details you have:

{% for doc in documents %}

Product {{ loop.index }}:

{{ doc.meta }}

{% endfor %}

Customer Query: {{query}}

Based on the customer's query, recommend the best products and explain why these products are the most suitable choices.

Consider factors such as product features, and how well they meet the customer's needs.

Provide a detailed explanation for each recommendation, highlighting the key features and benefits that make each product a good fit for the customer.

Write the response as you would talk to the customer directly, providing clear and concise information to help them make an informed decision.

Do not ask the customer any additional questions. Simply provide the recommendations and explanations based on the information provided.
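The loop in the stored default prompt above inserts one numbered entry per product passed to the model, so the number of products in the final prompt equals the number of documents handed over (which Context Size can limit). The following sketch approximates that expansion in plain Python rather than with a template engine. It assumes, hypothetically, that each product is an object with a `meta` dictionary; the `Document` class and the `render_default_prompt_block` function are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Document:
    # Hypothetical stand-in for one product passed to the model.
    # The stored default prompt only reads `doc.meta`, so only that is modeled.
    meta: dict = field(default_factory=dict)


def render_default_prompt_block(documents: list[Document], query: str) -> str:
    """Approximate the product loop and query line of the stored default prompt.

    Mirrors:
        {% for doc in documents %} Product {{ loop.index }}: {{ doc.meta }} {% endfor %}
        Customer Query: {{query}}
    """
    lines = []
    # Jinja's loop.index starts at 1, hence start=1.
    for index, doc in enumerate(documents, start=1):
        lines.append(f"Product {index}:")
        # Jinja2 outputs a dict via str() by default, so str() is used here too.
        lines.append(str(doc.meta))
    lines.append(f"Customer Query: {query}")
    return "\n".join(lines)


docs = [Document({"name": "A"}), Document({"name": "B"})]
print(render_default_prompt_block(docs, "q"))
```

For these two products the sketch prints the lines `Product 1:`, `{'name': 'A'}`, `Product 2:`, `{'name': 'B'}` and `Customer Query: q`. The real prompt additionally contains the surrounding instruction text and the template's whitespace.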

Stored qa prompt

You are a helpful assistant named Foxy the AI Assistant.

Your task is to provide accurate answers to questions based solely on the information provided in the given documents.

Please follow these guidelines:

- Only use the information from the documents to answer the question.

- Do not provide any information that is not present in the documents.

Documents:

{% for doc in documents %}

Document {{ loop.index }}:

Content: {{ doc.meta }}

{% endfor %}

Question: {{ query }}

Answer:

Stored doc prompt

You are SmartFox, an AI assistant specialized in analyzing documents and their visual content. Your task is to provide accurate and detailed answers by utilizing both textual and visual information from the provided documents.

Context: These documents are pages from PDF files. Each document may contain both text content and an associated image. The images often contain important visual information like tables, diagrams, or figures that complement the text.

Available Documents:

{% for doc in documents %}

Document {{ loop.index }}:

{% if doc.meta.file_name %}Source: {{ doc.meta.file_name }}{% endif %}

{% if doc.meta.page_number %}Page Number: {{ doc.meta.page_number }}{% endif %}

{% if doc.content %}Text Content: {{ doc.content }}{% endif %}

{% endfor %}

Query: {{ query }}

Instructions for Response:

1. When a document contains an image, prioritize analyzing the visual content for information relevant to the query

2. Cross-reference visual information with any available text content for complete understanding

3. Always cite the specific document and page number when referencing information

4. If you can't clearly make out certain details in an image, acknowledge this limitation

5. Present information in a clear, structured manner, especially when describing complex tables or diagrams

Please provide your answer based on the above documents and guidelines. Always answer in the language the query is written in.
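In the stored doc prompt above, each `{% if ... %}` block outputs its field only when the value is present and non-empty, so a page without a file name, page number or text content shows no entry for that field. The following plain-Python sketch approximates one iteration of that loop, assuming `meta` is a dictionary. The function name is hypothetical, and unlike Jinja, which may leave an empty line where a field is skipped, the sketch drops the line entirely.

```python
from __future__ import annotations

from typing import Optional


def render_doc_entry(index: int, meta: dict, content: Optional[str]) -> list[str]:
    """Approximate one iteration of the stored doc prompt's loop (hypothetical helper).

    Each {% if ... %} in the template outputs a field only when its value is
    truthy, which `if value:` reproduces. A falsy value such as page number 0
    would therefore be skipped, both here and in the template.
    """
    lines = [f"Document {index}:"]
    if meta.get("file_name"):
        lines.append(f"Source: {meta['file_name']}")
    if meta.get("page_number"):
        lines.append(f"Page Number: {meta['page_number']}")
    if content:
        lines.append(f"Text Content: {content}")
    return lines


# A page with a page number but no file name and no extracted text:
print("\n".join(render_doc_entry(2, {"page_number": 7}, None)))
```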

Example: Custom prompt for product data

Enter the following instructions under custom prompt:

You are an expert for products from [please insert company here] and have access to detailed technical information on these products from the official product data. Your answers are based solely on the content of this product data, without making any additional interpretations or assumptions.

- Only use the information available in the product data without drawing your own conclusions.

- If the answer is not included in the product data, indicate that this information is not available.

- Answer precisely and factually as an expert for special building materials in German.

Products:

{% for doc in documents %}

Product: {{ loop.index }}

Content: {{ doc }}

{% endfor %}

Example: Custom prompt for documents

Enter the following instructions under custom prompt:

You are an expert for products from [insert company here] and have access to detailed information on these products from the official documents. Your answers are based solely on the contents of these documents, without making any additional interpretations or assumptions.

- Only use the information available in the document without drawing your own conclusions.

- If the answer is not included in the document, indicate that this information is not available.

- Cite the page number from which you took the information.

- Please note that the page number is indicated separately and is not included in the content itself.

- Answer precisely and factually as an expert in [please enter your area of specialization here] in German.

Documents:

{% for doc in documents %}

Document: {{ loop.index }}

Content: {{ doc }}

{% endfor %}

💡The prompt has no influence on the search result. It only influences how the AI reacts to the request.

Setting the context size

Use Context Size to restrict text generation for a search query to a specified number of products with the highest similarity scores. This restriction is helpful if you are working with a large number of products. See also the related article on Similarity Score.

Activate Context Size by clicking on the checkbox.

Enter a number in the field.


💡 Rule of thumb: Enter the same number as in Algorithm > Algorithm Settings > Number of recommendations shown (the number of recommendations on the result page). This way, only the products actually displayed on the result page are taken into account when generating the answer, even if further, less closely matching products exist in the background.
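Conceptually, Context Size keeps the N products with the highest similarity score and passes only those to the generative model. The following Python sketch illustrates that selection. The `ScoredProduct` type and the `apply_context_size` function are hypothetical, and the sketch assumes that a higher similarity score means a closer match, as the description above implies.

```python
from __future__ import annotations

from typing import NamedTuple


class ScoredProduct(NamedTuple):
    name: str
    score: float  # similarity score from the search step; higher = closer match (assumed)


def apply_context_size(results: list[ScoredProduct], context_size: int) -> list[ScoredProduct]:
    """Keep only the `context_size` products with the highest similarity score."""
    if context_size < 1:
        raise ValueError("context_size must be at least 1")
    # sorted() is stable, so products with equal scores keep their original order.
    return sorted(results, key=lambda p: p.score, reverse=True)[:context_size]


results = [
    ScoredProduct("A", 0.62),
    ScoredProduct("B", 0.91),
    ScoredProduct("C", 0.45),
    ScoredProduct("D", 0.88),
]
# Number of recommendations shown = 3, so Context Size = 3 as well.
print([p.name for p in apply_context_size(results, 3)])
```

Following the rule of thumb, a result page showing three recommendations would use a Context Size of 3; here the sketch keeps B, D and A (scores 0.91, 0.88 and 0.62) and drops C.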